
Panel – Adopting Modern Data Fabrics and Cloud in Trading Technology Infrastructure

Speakers:

- Peter Williams, FIA, Chief Technology Officer, CJC Ltd.

- Richard Balmer, Senior Director of Network Product Strategy, IPC Systems.

- Naomi Clarke, Data Innovation & Strategy, CDO Advisory.

- Bob Mudhar, Senior Director, Client Account Manager & Head of UK Engineering, Synechron.

Moderator: Andrew Delaney, President & Chief Content Officer, A-Team Group.

At the A-Team’s TradingTech Briefing, hosted at the London Marriott Hotel Canary Wharf on the 21st of September, a panel discussed modern data fabrics and cloud in trading technology infrastructure. The discussion was framed by three topics (below), and the audience took part through live polls and Q&A via Slido, an event-specific mobile application.

Topics Covered:

- Describe the current state of data infrastructure across capital markets and its limitations.

- Modern data infrastructure components and capabilities, and data fabric objectives.

- How to overcome the challenges of building a modern infrastructure mesh.

Source: CJC – Adopting Modern Data Fabrics and Cloud in Trading Technology Infrastructure Panel (Sept 2023)

Current State of Capital Market Data Infrastructures and Limitations

State of Capital Market Data Infrastructures

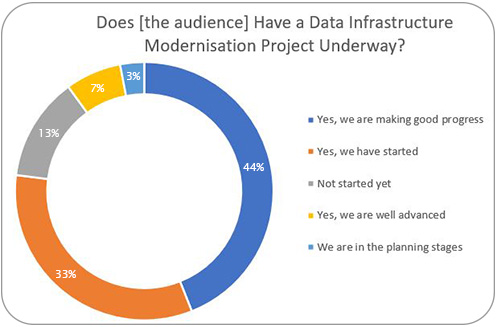

Source: CJC – A-Team Audience Poll Question 1

Opening the discussion, the panel described the industry as being in flux, with capital market data infrastructure potentially on the cusp of seismic change due to cloud and other emerging technologies. Historically, data infrastructures have been complex, and their strategic importance tended to be forgotten. The panel was optimistic, noting that organisations were now actively monitoring trading technology trends, resulting in modular hybrid-cloud-type architecture deployments (as illustrated in a previous article).

Current Market Data Infrastructure Limitations

Despite the optimism, the panel highlighted that the overarching limitation is how clearly a business understands its objectives, which correlates with outcomes and understandably varies between organisations. Taking cloud-based infrastructure as an example, it was pointed out that organisations tended to approach cloud adoption with a cost-control focus, building in the cloud initially and then moving back on-premise once they reach a scale at which further scaling no longer delivers cost benefits.

Another limitation highlighted was that communication with market data stakeholders (developers, system integrators, and data consumers) is often overlooked, even though it could reduce barriers to entry. Legacy technology could also be a barrier to progress. The panel urged institutions to review the legacy technology and APIs they use and to consider absorption, or a form of abstraction, to enable side-by-side usage with modernised technology.

The panel observed that organisations tended to have more success when the business recognised that data helps generate revenue, which often led to the creation of business data management functions and staff, and of marketplace-like data catalogues within performant data infrastructures. To summarise potential limitations, a panellist listed three factors likely to limit progress:

- Business silos – organisational or merged business products.

- Fragmented technology – a consequence of silos, building or endless wrangling.

- Limited off-the-shelf integrations and interoperability between technology and machine learning/artificial intelligence (ML/AI).

Modern Data Infrastructure Components & Capabilities, and Data Fabric Objectives

Data Governance – Traceability & Integration

The panel agreed that data traceability is currently an industry challenge and that transparent traceability is vital for data governance when assessing new business technologies, leading to observability later. To comply with data governance, businesses must understand their data and its requirements (security, accessibility, and regulatory compliance) before comparing where they are, likely in fragmented market data systems, with where the business wants to be.

On fragmentation, the panel pointed out that “the big missing piece is integration.” The panel believed the crux of this challenge is metadata, which is difficult to communicate from a business perspective but, technologically, sits at the heart of an abstraction layer that applications can leverage and build on.

Standardisation & Data Analytics

Whether on-premise or in the cloud, building on an abstraction layer requires standardised distribution and ingestion connectors, with consistent processes around data products, in order to utilise metadata. The metadata connects the underlying physical storage across applications and so avoids unnecessarily duplicating data (see inventory management here). As the data accrues incrementally, the panel surmised that translating analytics into business terminology may prove challenging. They advised that the business ultimately wants to know what the data is and whether it can easily be extracted.

In summary, leverage metadata-driven data to achieve interoperable flexibility, then aim for self-service environments.
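The metadata-driven abstraction the panel describes can be pictured with a small sketch. This is an illustration only, not anything presented at the event: the `DatasetMetadata` and `Catalogue` names, the fields, and the storage URI are all hypothetical. It assumes a catalogue that maps a business-facing dataset name to a single physical location, so applications ask for data by name rather than copying it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetMetadata:
    """Hypothetical catalogue entry describing one data product."""
    name: str          # business-facing name
    description: str   # what the data is, in business terms
    location: str      # physical storage (illustrative URI only)
    owner: str         # accountable team


class Catalogue:
    """Maps business names to a single physical copy of each dataset."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetMetadata] = {}

    def register(self, entry: DatasetMetadata) -> None:
        # Refuse a second registration so a dataset is not silently duplicated.
        if entry.name in self._entries:
            raise ValueError(f"{entry.name!r} is already registered")
        self._entries[entry.name] = entry

    def resolve(self, name: str) -> str:
        # Applications ask for a name; the catalogue says where the data lives.
        return self._entries[name].location

    def search(self, term: str) -> list[str]:
        # Self-service lookup over names and descriptions.
        term = term.lower()
        return sorted(
            e.name for e in self._entries.values()
            if term in e.name.lower() or term in e.description.lower()
        )


# Usage with made-up values.
catalogue = Catalogue()
catalogue.register(DatasetMetadata(
    name="eod_prices",
    description="End-of-day equity prices",
    location="s3://example-bucket/eod/",
    owner="market-data",
))
print(catalogue.search("equity"))
print(catalogue.resolve("eod_prices"))
```

The search method stands in for the self-service goal: a consumer can find out what the data is and where to get it without knowing the storage layout.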

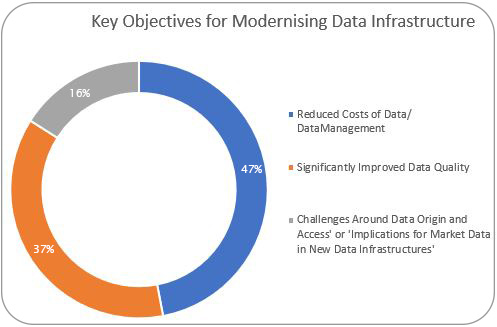

Audience Poll Questions: Key Data Infrastructure Modernisation Objectives (Multi-Answer)

Source: CJC – A-Team Audience Poll Question 2

Responding to the moderator and audience poll, panellists suggested that modernising data infrastructures can indeed both reduce costs and improve data quality, but organisations must be prepared to start from scratch by replacing all legacy technology. Alternatively, organisations should be prepared to make some trade-offs.

However, the panel agreed that progressive modernisation is an option; otherwise, legacy technology can make innovating difficult. It was suggested to start with products offering relative levels of safety and to consistently apply basic rules of quality control.

Furthermore, the panel agrees a modular architecture (data pipeline, aggregation, normalisation, and distribution layers) is the preferred starting point, as opposed to pre-built purchased deployments, so organisations can easily connect and disconnect their infrastructure as required to enable maximum value extraction.
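As a rough sketch of what "connect and disconnect" could mean in practice, the layers can be modelled as interchangeable stages with one shared interface. The stage functions, record fields, and sample values below are assumptions made for illustration; the panel did not prescribe an implementation.

```python
from __future__ import annotations

from typing import Any, Callable

Record = dict[str, Any]
Stage = Callable[[list[Record]], list[Record]]


def normalise(records: list[Record]) -> list[Record]:
    # Normalisation layer: upper-case symbols and coerce prices to float.
    return [
        {**r, "symbol": str(r["symbol"]).upper(), "price": float(r["price"])}
        for r in records
    ]


def aggregate(records: list[Record]) -> list[Record]:
    # Aggregation layer: keep only the last record seen per symbol.
    latest: dict[str, Record] = {}
    for r in records:
        latest[r["symbol"]] = r
    return list(latest.values())


def run_pipeline(records: list[Record], stages: list[Stage]) -> list[Record]:
    # Each stage has the same signature, so stages can be added,
    # removed, or swapped without touching the others.
    for stage in stages:
        records = stage(records)
    return records


# Usage with made-up ticks.
raw = [
    {"symbol": "vod.l", "price": "72.5"},
    {"symbol": "VOD.L", "price": "72.6"},
    {"symbol": "bp.l", "price": "480"},
]
full = run_pipeline(raw, [normalise, aggregate])
without_aggregation = run_pipeline(raw, [normalise])
print(full)
```

Because every stage takes and returns a list of records, dropping the aggregation layer is a one-line change to the stage list rather than a rebuild.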

How to Overcome The Challenges of Building Modern Infrastructure Mesh

Building A Data Fabric Foundation

Strategically, misalignment between the business management making investment decisions and the implementation team is another challenge. A collaborative approach is advised for superior outcomes, enabling an accountable and deliverable project that involves two-way communication around data governance, metadata management and data engagement. The panel acknowledged that this is potentially a difficult process, particularly the conceptual conversations on operational risk, and advised summarising pain points and leveraging analytics to illustrate quick wins or proofs-of-concept.

From an infrastructure perspective, the panel highlighted a “spaghetti mess of connectivity” which hinders data governance, and noted that organisations frequently do not have sound audits of their data sources. Consequently, the inflow of consumed data is often duplicated by multiple business units and is not cost-efficient (sound familiar? See CJC’s Commercial Management).
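A data source audit of this kind can start very simply. The sketch below assumes an inventory of (business unit, data source) pairs and reports any source taken by more than one unit. The function name, unit names, and source names are all hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def find_duplicate_feeds(
    subscriptions: list[tuple[str, str]],
) -> dict[str, list[str]]:
    """Return each data source consumed by more than one business unit."""
    units_by_source: defaultdict[str, set[str]] = defaultdict(set)
    for unit, source in subscriptions:
        units_by_source[source].add(unit)
    # Keep only the sources that appear under two or more units.
    return {
        source: sorted(units)
        for source, units in units_by_source.items()
        if len(units) > 1
    }


# Usage with a made-up inventory.
inventory = [
    ("equities", "vendor_a_level1"),
    ("fx", "vendor_b_fx"),
    ("risk", "vendor_a_level1"),
]
duplicates = find_duplicate_feeds(inventory)
print(duplicates)
```

In this example the equities and risk units both take the same feed, which is the kind of overlap an audit would flag for consolidation.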

Regarding the cloud, it was acknowledged that cloud migration and integration could be challenging, and multi-cloud even more so. On migration and integration, functionality like multicast “is not going to go anywhere anytime soon” (see how CJC challenged some multicast distribution myths with AWS). Any innovation aiming to replace multicast must allow a fair setup for trading and exchanges. The panel emphasised the data placement flexibility that modular hybrid-cloud architectures provide, highlighting the cloud as an avenue to scale operations up to the point at which scaling no longer delivers cost benefits. Ideally, it was advised, organisations should have an equivalent or mirror-type environment and keep some operations on-premise.

Interestingly, during the previous question, it was noted that when leveraging public cloud platforms, uploading data was relatively easy but extracting it was less so, due to the cloud provider’s commercial and consumption interests (compute, memory and in-tune networking) in moving workloads closer to the cloud.

On multi-cloud, as mentioned in an earlier article, each cloud deployment and provider had its respective differences. Developing multiple cloud environments to function interoperably and uniformly is extremely difficult, which circles back to the earlier standardisation points and subsequently creates a fractional layer. Fundamentally, simplified data structures are the value proposition and the starting point has to be data consumption.

An interlinked challenge is observability and controllability, which are vital to holistically monitor, control and maintain on-premise and cloud-based systems (see mosaicOA). According to one panellist, the scariest question for an operations team is “Where is my message, now?!”, since a trade confirmation message could be worth millions. When running an on-premise system, teams have complete control of switches, with finer control and observability than across multiple platform distributions. This circles back to the business objectives of modernising the data infrastructure and the agreed trade-offs. Do operators enable cost savings at the expense of answering that question? Alternatively, answering may be possible with additional investments, changes or innovations.
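To make the “Where is my message, now?!” question concrete, here is a minimal sketch of hop-level tracing. It assumes every component reports a message ID and a timestamp as the message passes through. The `MessageTracer` class and the component names are hypothetical, and a real deployment would face the cross-platform visibility gaps the panel describes.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MessageTracer:
    """Records which component saw each message, and when."""
    _hops: dict[str, list[tuple[str, float]]] = field(default_factory=dict)

    def record(self, message_id: str, component: str, timestamp: float) -> None:
        # Each component reports in as the message passes through it.
        self._hops.setdefault(message_id, []).append((component, timestamp))

    def last_seen(self, message_id: str) -> Optional[str]:
        # The answer to the question is the most recently reported hop.
        hops = self._hops.get(message_id)
        if not hops:
            return None
        return max(hops, key=lambda hop: hop[1])[0]


# Usage with made-up hops for one trade confirmation.
tracer = MessageTracer()
tracer.record("conf-1", "on-prem-gateway", 1.0)
tracer.record("conf-1", "cloud-message-bus", 2.0)
print(tracer.last_seen("conf-1"))
print(tracer.last_seen("conf-2"))
```

The sketch only works if every hop reports in, on-premise and in the cloud alike. A component that stays silent is exactly the kind of blind spot that makes the question hard to answer.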

Buying A Data Fabric Foundation

Regarding building versus buying a pre-built data fabric foundation, one panellist noted that buying allowed organisations to enter at a fairly high level of integration but that (reverting to observability) blind spots and some key learning will be inevitable. The panel ultimately agreed that buying was an option if certain conditions were met (below); otherwise, building bespoke modular architectures may generate better long-term ROI. Decisions must be driven by business objectives, with technology toolkits chosen accordingly, and organisations should consider how integration will work before starting.

- Pre-built systems were aligned with business objectives.

- Commercial terms were acceptable.

- The organisation was comfortable committing to future external technology decisions.

About CJC

CJC is the leading market data technology consultancy and service provider for global financial markets. CJC provides multi-award-winning consultancy, managed services, cloud solutions, observability, and professional commercial management services for mission-critical market data systems. CJC is vendor-neutral and ISO 27001 certified, enabling CJC’s partners the freedom to focus on their core business. For more information, contact us or email: marketing@cjcit.com
